I’ve always thought the timing of the PACE protocol changes needs to be seen in the context of the earlier-finishing FINE trial.

The protocols for the FINE and PACE trials had the same criterion for improvement on the SF36PF scale (the two teams having agreed to swap protocols at a May 2003 TMG meeting). The FINE protocol confirms that the 11-item Chalder questionnaire would be used, but does not specify what would count as improvement on that scale.

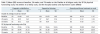

This table, from Wilshire et al. 2018 (full reference below), shows the switch in the criteria for improvement on the SF36PF and CFQ that was made between the PACE protocol and the publication of the PACE results, i.e. 2007 vs 2011:

From: Wilshire CE, Kindlon T, Courtney R, Matthees A, Tuller D, Geraghty K, Levin B. Rethinking the treatment of chronic fatigue syndrome-a reanalysis and evaluation of findings from a recent major trial of graded exercise and CBT. BMC Psychol. 2018 Mar 22;6(1):6. doi: 10.1186/s40359-018-0218-3. PMID: 29562932; PMCID: PMC5863477.

On 29 April 2009, the PACE statistical analysis plan was discussed at a TSC meeting, but there was no mention of changing the criteria for improvement at that point.

On 13 May 2009, the FINE trial results were presented to the FINE TMC.

On 17 June 2009, the FINE trial results were presented to the PACE TMG.

The FINE trial results as published in 2010:

From: Wearden AJ, Dowrick C, Chew-Graham C, Bentall RP, Morriss RK, Peters S, Riste L, Richardson G, Lovell K, Dunn G; Fatigue Intervention by Nurses Evaluation (FINE) trial writing group and the FINE trial group. Nurse led, home based self help treatment for patients in primary care with chronic fatigue syndrome: randomised controlled trial. BMJ. 2010 Apr 23;340:c1777. doi: 10.1136/bmj.c1777. PMID: 20418251; PMCID: PMC2859122.

The FINE authors summarised their findings as follows:

Our study shows that, when compared with treatment as usual, pragmatic rehabilitation has a statistically significant but clinically modest beneficial effect on fatigue at the end of treatment (20 weeks), which is mostly maintained but no longer statistically significant at one year after finishing treatment (70 weeks). Pragmatic rehabilitation did not significantly improve our other primary outcome, physical functioning, at either 20 weeks or 70 weeks.

On 4 November 2009, less than 5 months after the FINE results were presented to the PACE TMG, the PACE TMG meeting minutes noted changes to the analysis plan:

TSC/ASG[Redacted] fed back that the analysis strategy was close to completion. There had been a number of final issues to resolve. It was highlighted that an important change has been made to the reporting of the primary outcome measures. Previously it had been decided that the results would be presented categorically using thresholds derived from the binary scoring of the Chalder questionnaire and the continuous SF36 scale. It has since been decided that the original question posed by the study would be better answered by comparing the continuous scores on both the Chalder and SF36 scales. The originally planned categorical scores will also be reported in the main paper, as a secondary analysis, reflecting clinically important differences.

A few concerns were raised that making a change at this stage may invite criticism. It was highlighted that the change will increase the sensitivity of the study and that the changes have been made before the reviewing of any data, and that the change will be reported in the paper. It was agreed by the TMG that these changes should go ahead.[my bold]

White et al. described the change in 2015 (BJPsych Bull. 2015 Feb;39(1):24–27):

We originally planned to use a composite outcome measure of the proportions of participants who met either a 50% reduction in the outcome score or a set threshold score for improvement. However, as we prepared our detailed statistical analysis plan, we quickly realised that a composite measure would be hard to interpret, and would not allow us to answer properly our primary questions of efficacy (i.e. comparing treatment effectiveness at reducing fatigue and disability). Before any examination of outcome data was started, and after approval by our independent steering and data monitoring committees, we decided to modify our method of analysis to one that simply compared scores between treatments at follow-up, adjusting the analysis by baseline scores.
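The two analysis approaches described in the quotes above can be contrasted with a minimal Python sketch. Everything in it is an illustrative assumption: the function names, the toy scores and the threshold value are hypothetical; the composite rule is written for a lower-is-better scale such as a fatigue score (matching the "50% reduction" wording); and the continuous comparison is a simple baseline-adjusted (ANCOVA-style) difference in means, not the model actually used in PACE.

```python
from statistics import fmean

# Hypothetical illustration only: scores, thresholds and arms are made up.
# Assumes a scale where LOWER scores are better (e.g. a fatigue score).


def composite_improved(baseline: float, follow_up: float, threshold: float) -> bool:
    """Composite rule: improved if the score fell by at least 50% from
    baseline OR reached the (hypothetical) threshold score."""
    halved = follow_up <= 0.5 * baseline
    at_threshold = follow_up <= threshold
    return halved or at_threshold


def proportion_improved(arm: list[tuple[float, float]], threshold: float) -> float:
    """Share of (baseline, follow_up) pairs in one arm meeting the composite rule."""
    if not arm:
        raise ValueError("arm has no participants")
    return sum(composite_improved(b, f, threshold) for b, f in arm) / len(arm)


def adjusted_difference(arm_a: list[tuple[float, float]],
                        arm_b: list[tuple[float, float]]) -> float:
    """Baseline-adjusted difference in mean follow-up score (arm_b minus arm_a).

    Equals the arm coefficient in a simple regression
    follow_up ~ baseline + arm with a common slope, where the slope is
    estimated from the pooled within-arm covariance and variance.
    """
    sxy = sxx = 0.0
    means = []
    for arm in (arm_a, arm_b):
        xs = [b for b, _ in arm]
        ys = [f for _, f in arm]
        mx, my = fmean(xs), fmean(ys)
        sxy += sum((x - mx) * (y - my) for x, y in zip(xs, ys))
        sxx += sum((x - mx) ** 2 for x in xs)
        means.append((mx, my))
    slope = sxy / sxx  # pooled within-arm slope of follow-up on baseline
    (mx_a, my_a), (mx_b, my_b) = means
    return (my_b - my_a) - slope * (mx_b - mx_a)


if __name__ == "__main__":
    # Toy (baseline, follow_up) pairs for two hypothetical arms.
    arm_a = [(20, 15), (24, 19), (28, 23)]
    arm_b = [(22, 14), (26, 18), (30, 22)]
    print(adjusted_difference(arm_a, arm_b))  # -3.0
```

The contrast the sketch makes concrete: the composite rule reduces each participant to improved or not improved, so a modest shift in scores may not change the proportion at all, whereas the adjusted difference uses every change in score. Continuous analyses of this kind generally have more statistical power than dichotomised ones, which is consistent with the minutes' remark that the change "will increase the sensitivity of the study".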