Use of EEfRT in the NIH study: Deep phenotyping of PI-ME/CFS, 2024, Walitt et al

- Thread starter Andy

SunnyK

Senior Member (Voting Rights)

Exactly! And I think this must be the article I tried to refer to in my just-now post on the old Dan Neuffer book, the paper my MIL read that was mentioned in a Health Rising newsletter.

For me, the fundamental problem with the “Effort Choice” task, and the interpretation of the easy task/hard task ratio in this paper, is that you could have predicted the outcome before the study. The groups do not come into this task on an equal footing.

Normal volunteers come in with nothing to lose and play a trivial financial game – a bit of fun.

Patients come in with ME/CFS and the following:

· they have learned from their own experience that regardless of how satisfying it might be to complete a task quickly, or how enticing a reward, they often have to forego these short-term satisfactions and rewards in order to manage their condition, in order to get more, smaller rewards long-term, in terms of being able to do a certain amount of activity rather than being crashed

· they are counselled by doctors and other health professionals to pace their activities, to be the tortoise and not the hare – the clinicians from whose practices these patients were drawn all, to my knowledge, advise their patients to pace

· the game might have been viewed by some ME/CFS participants as a test of whether they were pacing or whether they were somewhat reckless even when given a choice, such that the “right answer” was to choose quite a few easy tasks or achieve a balance of easy and hard tasks

· they are playing this game at some point in a week of testing which would be gruelling at any level of ME/CFS, and where their ability to pace is extremely limited – most of the time (perhaps all of the time except in this task), they just have to do the exact same as the healthy volunteers. To choose some easy tasks would have been wise, in order to increase their chances of being able to complete the week in some sort of decent shape.

For all of those reasons, I would have expected patients to choose more easy tasks than controls, regardless of whether they thought they could do the tasks in the moment or not.

So the test does not show that they thought they couldn’t do the tasks but actually they could. It shows that people with ME/CFS are coming in with a different reward and penalty system than healthy volunteers. Or more accurately, it shows nothing.

Patients are balancing these trifling rewards of $2-$8.42 with the penalty of PEM if they get it wrong, during a week when researchers are repeatedly and explicitly trying to trigger PEM. For controls, there’s no penalty.

Jonathan Edwards

Senior Member (Voting Rights)

Why does this not matter? Why is it not the main thing we are discussing? How does this not make the whole thing moot? What am I missing?

I think that is exactly right.

It is quite hard to find a clear way of expressing one's intuitive sense of 'no way' about this but that is a good one.

Another way maybe is to point out that the authors are wrong to talk of the brain deciding things. The brain is a forum within which information is passed from one part to other parts all the time. A decision on a choice will involve some component of the brain choosing but also another component of the brain in the same place presenting the chooser with the odds of better or worse to make the choice on.

An abnormality of a chooser component is a completely different thing from an abnormality of an odds presenter component - which may tell the PWME's chooser component that the pay back is going to be bad. But there is no way you can tell from brain scans which component is wrong because there is only one place where one is providing information to the other.

The fundamental problem here is that the psychologists have no understanding of the structure of the mind. My other main interest is in just that - the relational structure of thinking processes. The authors make the standard false assumptions.

Evergreen

Senior Member (Voting Rights)

Agree with everything you said. Healthy volunteers have only rewards. Patients have both rewards and penalties to consider. Even if you argue that healthy volunteers could have non-dominant arm/hand pain/stiffness the next day if they do lots of hard tasks, they also have the experience of healthy people that this will not stop them doing what they want, and will resolve nice and quickly. Whereas patients are reckoning with days/weeks of consequences both from this particular task viewed on its own, and from the cumulative effect of a week of repeated overexertion, and another to finish the study. Edit to add: the consequences of participation in the entire study could go on for months/years for some participants.

There's a glaring difference between the controls and the PwME... they are comparing tasks with a reward, and saying the groups are equal & could do the tasks as well as each other, rewards the same etc etc blah blah....

but from my POV they are missing the main point which is that for the PI-ME/CFS group there is a punishment that comes later, completely wiping out the notion of any reward and being a ready explanation for the different choices.

The reason this is not the central part of the discussion here is, in my view, that we don’t have relevant data to draw on to make the argument stronger, because PEM wasn’t measured in the effort task.

The strongest support for your argument comes from their own study of PEM after CPET. Patients reported climbing malaise after CPET, peaking 24 hours after baseline and still leaving them “much worse than baseline” at 72 hours. They didn’t measure beyond 72 hours which is a shame. We should also point to other studies that have reported PEM after tasks, ideally in submaximal tasks ie those that involve effort levels a bit more similar to the effort task.

We can point to that, and say that the EEfRT task, and specifically hard tasks within EEfRT, are likely to have been difficult enough for some pwME that they would have expected it to induce PEM, and thus that this potential penalty would have factored into their choices between hard and easy tasks. From a scientific point of view, it’s only a hypothesis – the hypothesis that patients were factoring in penalties that healthy volunteers weren’t. And this may not seem plausible to many scientists: people without PEM would find it easier to imagine PEM following CPET than PEM following repetitive finger movements. Since we don’t know what the participants were thinking, and it hasn’t been reported, the argument is left on weak ground.

Given that, the strongest way to refute their finding would be to demonstrate, with their data (or someone else’s), that something else, ie something other than pwME “avoiding” hard tasks, explains their finding better.

But the reward-penalty argument you are highlighting absolutely has to be part of any response. I would argue that it should be the first argument made.

Edited to add: the consequences of participation in the entire study could go on for months/years for some participants.

Kitty

Senior Member (Voting Rights)

The reason this is not the central part of the discussion here is, in my view, that we don’t have relevant data to draw on to make the argument stronger, because PEM wasn’t measured in the effort task.

I see that point entirely.

But it also works to invalidate the thing, because if they're not measuring PEM they're not measuring ME.

Effort preference, the decision to avoid the harder task when decision-making is unsupervised and reward values and probabilities of receiving a reward are standardized, was estimated using the Proportion of Hard-Task Choices (PHTC) metric. This metric summarizes performance across the entire task and reflects the significantly lower rate of hard-trial selection in PI-ME/CFS participants (Fig. 3a).

I can't see how just the probability of choosing a hard task is a measure of effort preference. For example, what's the point of choosing a hard task with a value of $1.78 and a probability of 0.12? If you are choosing lots of those low-value high-effort tasks, then your effort preference must be out of whack.
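To make the objection concrete, here is a minimal sketch of a plain proportion-of-hard-choices calculation. It assumes PHTC is simply hard choices divided by total trials; the paper's actual modelling may differ, and the function name and trial list are made up for illustration, not taken from the study.

```python
def proportion_hard_task_choices(choices):
    """Return the fraction of trials on which the hard task was chosen.

    `choices` is a list of strings, "hard" or "easy", one per trial.
    Reward value and win probability play no part in this number.
    """
    if not choices:
        raise ValueError("need at least one trial")
    hard = sum(1 for c in choices if c == "hard")
    return hard / len(choices)


# Made-up trial sequence, for illustration only (not study data).
example_choices = ["hard", "easy", "easy", "hard", "easy"]
phtc = proportion_hard_task_choices(example_choices)
print(f"PHTC = {phtc:.2f}")
```

A participant who picks hard only on poor-value trials and one who picks hard only on good-value trials get the same score if they pick hard equally often, which is the weakness being pointed out.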

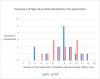

I think the number of attempted tasks with a probability adjusted value of at least $2 for each participant is a better measure, and I've called those tasks 'high value tasks'. So, for example, if the task has a value of $3.00 and a probability of 0.88, the probability adjusted value is $2.64, and it qualifies to be counted. I counted a total of 13 tasks with a probability adjusted value of $2.00 or more in the experiment.

So, I've counted the number of high value tasks attempted by each participant and made a frequency chart.

And, just looking at it, there is no difference between the controls and the CFS groups when it comes to selecting high value tasks. The CFS participants are not performing worse on value contingent effort preferences.
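For anyone wanting to redo this count, a rough sketch of the calculation described above might look like the following. The $2.00 threshold and the $3.00 × 0.88 example come from the post; the participant IDs, the trial records and the assumption that "attempted" means "chose the hard option on that trial" are illustrative guesses, not study data.

```python
from collections import Counter

HIGH_VALUE_THRESHOLD = 2.00  # dollars; the cut-off used in the post


def probability_adjusted_value(reward, probability):
    """Reward on offer multiplied by the chance of actually winning it."""
    return reward * probability


def count_high_value_attempts(trials):
    """Count trials where the hard task was chosen and its
    probability-adjusted value met the threshold (assumed definition)."""
    return sum(
        1
        for t in trials
        if t["choice"] == "hard"
        and probability_adjusted_value(t["reward"], t["probability"])
        >= HIGH_VALUE_THRESHOLD
    )


# Hypothetical trial records, for illustration only.
trials_by_participant = {
    "control_1": [
        {"reward": 3.00, "probability": 0.88, "choice": "hard"},  # 2.64, counted
        {"reward": 1.78, "probability": 0.12, "choice": "hard"},  # about 0.21, not counted
        {"reward": 4.00, "probability": 0.50, "choice": "easy"},  # high value, not attempted
    ],
    "patient_1": [
        {"reward": 3.00, "probability": 0.88, "choice": "hard"},  # 2.64, counted
        {"reward": 1.78, "probability": 0.12, "choice": "easy"},
        {"reward": 4.00, "probability": 0.50, "choice": "hard"},  # 2.00, counted
    ],
}

counts = {
    pid: count_high_value_attempts(trials)
    for pid, trials in trials_by_participant.items()
}
# Frequency table: how many participants attempted each number of high value tasks.
frequency = Counter(counts.values())
print(counts)
print(dict(frequency))
```

Running the frequency table separately for each group would give the two distributions to compare in a chart.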

Now, I know the set up of the game messes with decisions and there is a lot going on, but the NIH investigators seem happy to pretend that their game design has no influence on strategy. And who knows what the participants were actually told. I'm not pretending this is perfect science, by any means. But, given what was done, how is my analysis any worse than that of Walitt et al? I feel that I must be missing something, that Walitt's hypothesis can't be based on such a silly analysis as the probability of choosing a hard task. But the charts in Figure 3 are there.

(note, there may well be errors in my chart, but I think it's basically correct)

Evergreen

Senior Member (Voting Rights)

I think that's why they're not attributing patients' performance to anhedonia. They're saying, it's not that patients aren't reward-driven, it's that they're driven to avoid effort.

I can't see how just the probability of choosing a hard task is a measure of effort preference. For example, what's the point of choosing a hard task with a value of $1.78 and a probability of 0.12? If you are choosing lots of those low-value high-effort tasks, then your effort preference must be out of whack.

I think the number of attempted tasks with a probability adjusted value of at least $2 for each participant is a better measure, and I've called those tasks 'high value tasks'. So, for example, if the task has a value of $3.00 and a probability of 0.88, the probability adjusted value is $2.64, and it qualifies to be counted. I counted a total of 13 tasks with a probability adjusted value of $2.00 or more in the experiment.

So, I've counted the number of high value tasks attempted by each participant and made a frequency chart.

View attachment 21379

And, just looking at it, there is no difference between the controls and the CFS groups when it comes to selecting high value tasks. The CFS participants are not performing worse on value contingent effort preferences.

The point of the EEfRT is to somehow magically capture intrinsic psychological motivation phenomena, not strategies.

But given the psychology of the game, and that not everybody will understand it in its full depth, there's still a trade-off with people saying no to a low probability task even though there is no intrinsic reason to do so, so it does capture some psychological and/or biological phenomena, whatever these may be.

That's why the authors state that the patients aren't driven by potential reward, rather than that they are driven by a general lack of effort, so to speak in Walitt's analysis. The EEfRT is supposed to remove strategies and to capture some intrinsic nature of people; the nature the authors argue was captured here is that pwME prefer to go hard a bit less often.

Technically the probability that is assigned to a task should not matter at all for a healthy person (assuming finger fatigue doesn't crucially matter). It should be irrelevant to them whether a task with $4.00 has a probability of 0.12 or 0.88, because in either case it's a "free lunch". Since they don't have an energy limiting condition, it really shouldn't matter if they spend energy on a task even if the probability is low: it's always a "free lunch" no matter what the probability is, and what matters is the size of the reward. An example from everyday life would be being given the chance to play the lottery for free: anybody sensible (who is keen on winning some money) would have to say yes.

I don't think that's right. First because we believe that the participants were told that only two rewards would be randomly selected. And second, even if the participants didn't really understand the consequences of that payout structure, the experiment was only 15 minutes long. If you do the low value hard tasks, you are using up time. You could instead do a quick easy task, give your non-dominant little finger a break, and then roll the dice for the chance of a better reward in the next task. There's no point doing a long high effort task with a probability of 0.12 and a value of 1.24, for example.

I don't think that's right. First because we believe that the participants were told that only two rewards would be randomly selected. And second, because the experiment was only 15 minutes long. If you do the low value tasks, you are using up time. You could instead do a quick easy task and then roll the dice for the chance of a better reward in the next task. There's absolutely no point doing a long high effort task with a probability of 0.12, it will always give you a probability adjusted reward of a lot less than the $1.00 you could get for doing the fast easy task.

Yes, that's true. Haha, I had actually thought about exactly that before against the counterargument I proposed above when I thought about it for the first time a couple of days ago, but then forgot about it again. You are of course right. I will edit my comment above.

Eddie

Senior Member (Voting Rights)

I don't think that's right. First because we believe that the participants were told that only two rewards would be randomly selected. And second, even if the participants didn't really understand the consequences of that payout structure, the experiment was only 15 minutes long. If you do the low value hard tasks, you are using up time. You could instead do a quick easy task and then roll the dice for the chance of a better reward in the next task. There's no point doing a long high effort task with a probability of 0.12 and a value of 1.24, or even 4.00, for example.

Or even better, do the quick easy task and fail it so it doesn't go into the pool of rewards

I don't think that's right. First because we believe that the participants were told that only two rewards would be randomly selected. And second, even if the participants didn't really understand the consequences of that payout structure, the experiment was only 15 minutes long. If you do the low value hard tasks, you are using up time. You could instead do a quick easy task and then roll the dice for the chance of a better reward in the next task. There's no point doing a long high effort task with a probability of 0.12 and a value of 1.24, for example.

Something I did want to re-mention about these findings and the reward drive, which is also what your analysis is about, which I wrote about in a very early comment here, and which others have also talked about: in an EEfRT study you measure a tremendous number of things, so it's very likely you'll find one thing that is statistically significant but then can't be reproduced in further studies (which is exactly what happens in a lot of studies), and you can put any twist on the results that you want to.

That is why the conclusion of these results could have been precisely the opposite as well. The kind of analysis you, myself and other people have done on here has been used to do exactly that, and there's precedent for it in other studies: "Thus, individuals with schizophrenia displayed inefficient effort allocation for trials in which it would be most advantageous to put forth more effort, as well as trials when it would appear strategic to conserve effort." In other words, it can just as well be argued that going for hard more often, but not at the "right moment", shows inefficient effort allocation. If one could show this via a rigorous analysis, one could show that pwME have a higher functioning effort allocation rather than anything else.
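The "measure enough things and something will come up significant" point can be put into numbers with a quick sketch. It assumes the tests are independent and each uses the conventional 0.05 threshold; neither assumption is taken from the paper, and the function name is just for illustration.

```python
def familywise_error_rate(num_tests, alpha=0.05):
    """Chance of at least one false positive across `num_tests`
    independent tests when every null hypothesis is true."""
    if num_tests < 0:
        raise ValueError("num_tests must be non-negative")
    return 1 - (1 - alpha) ** num_tests


for m in (1, 5, 10, 20):
    rate = familywise_error_rate(m)
    print(f"{m:2d} tests: {rate:.0%} chance of at least one false positive")
```

Under those assumptions, twenty uncorrected tests give roughly a 64% chance of at least one spurious "significant" result, which is why a single significant finding among many non-significant ones deserves caution.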

Yes, that's true. Haha, I had actually thought about exactly that before against the counterargument I proposed above when I thought about it for the first time a couple of days ago, but then forgot about it again. You are of course right. I will edit my comment above.

Well, I've been thinking about what you wrote, and thinking that you are right. It depends what you do. Yeah, ideally as Eddie says, you'd just non-complete the easy tasks and get on to the high value hard tasks. If you don't believe that you can intentionally not complete a task, it is probably better to do fewer of the easy $1 tasks, and you'd be ok with some tasks not producing winnings.

There's a glaring difference between the controls and the PwME... they are comparing tasks with a reward, and saying the groups are equal & could do the tasks as well as each other, rewards the same etc etc blah blah....

but from my POV they are missing the main point which is that for the PI-ME/CFS group there is a punishment that comes later, completely wiping out the notion of any reward and being a ready explanation for the different choices....

It's not a true comparison....

What am I missing?

Nothing.

They did not control for that critical factor.

Just another example of the problem of inadequate (and sometimes non-existent) control that saturates this area of medicine. It is almost the defining characteristic of it now.

On the fatigue question, just looking at the spreadsheet, it looks like people with ME/CFS were more likely to not attempt hard tasks back to back. I think that is probably because of muscle fatigue from repeated use within a task. Resting the finger by doing an alternative task then allowed them to again function at a similar tapping speed. So, I wouldn't say that evidence of in-game fatigue didn't differ between the cohorts. I wonder if that decreased willingness/ability to do hard tasks back to back due to fatiguability would account for the difference in proportions of hard tasks selected.

(The study found evidence of fatiguability in the repeat hand grip test, so we know that muscle fatiguability was present in the ME/CFS cohort.)
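One way to check the back-to-back idea against the spreadsheet is to ask, for each participant, how often a hard choice is followed by another hard choice. The sketch below assumes each participant's choices are available as an ordered list of "hard"/"easy" strings; the example sequence is invented, not taken from the study data.

```python
def back_to_back_hard_rate(choices):
    """Of the trials that directly follow a hard choice,
    return the fraction that were also hard.

    Returns None if no trial follows a hard choice.
    """
    follow_ups = [
        nxt for prev, nxt in zip(choices, choices[1:]) if prev == "hard"
    ]
    if not follow_ups:
        return None
    return sum(1 for c in follow_ups if c == "hard") / len(follow_ups)


# Invented sequence, for illustration only.
example = ["hard", "hard", "easy", "hard", "easy", "hard", "hard"]
print(back_to_back_hard_rate(example))
```

Comparing this rate between the two cohorts, ideally alongside each person's overall proportion of hard choices, would show whether patients specifically avoid consecutive hard tasks or simply choose hard less often overall.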

Yes, I thought about that too and proposed precisely that in this comment:

Something we haven't analysed yet is whether pwME have to take breaks to be able to do hard tasks, i.e. whether there are more prominent features of high and low number of clicks that alternate and whether these dominate the lower mean behaviour more than ME/CFS vs HV does. I'll try to explain why it might be worthy of a look (even if it reveals nothing). Let's look at the plot for the first 35 rounds and only look at the hard rounds, i.e.

View attachment 21359

On the HV side the larger mean, i.e. "higher effort preference", is driven by HV H, HV P and HV O; these people simply don't exist on the ME/CFS side of things. The highest performer (in terms of opting for hard games) on the ME/CFS side of things is HV D and you might not expect him to get close to those high performers in the HV group because he's one of 2 people with the least ability to complete the rounds, so it's possible that he has to take breaks. The question then is why there aren't pwME that can go hard and repeatedly go hard, i.e. why ME/CFS C, K & F choose far less often to go for hard than HV H, HV P and HV O. And what about the other ME/CFS patients with a 100% success rate on hard trials? Is that due to a necessity of having to take breaks in-between rounds, or is it a different strategy (I don't think all of these people are trying to optimize their pay-out)? If you look at the comparison of first half vs second half you will see that this difference only starts increasing in the second half, so it could be fatigue driven.

I think it also makes sense to look in the literature of EEfRT studies to see how common people such as HV H, HV P and HV O usually are amongst healthy people. I'd guess they are very common (especially because in some trials people are told different instructions to ensure people don't follow strategies that end-up minimising play time on hard rounds), because I think many people will go hard if they can and if they haven't really understood what the better choice is in terms of end-rewards, but you never know, perhaps these are outliers (but I don't think so).

However, when I began looking into it nothing too fruitful came of it, at least at a first glance with a basic analysis. The variance amongst the pwME is quite high so you also have pwME choosing to go hard-hard-hard-hard-easy-easy-easy-easy-hard-hard-hard which destroys more obvious general patterns which would be indicative of "energy conservation" or "breaks". Maybe a less basic analysis could reveal something, but I really have no idea and also don't know how strong of an argument it'll end up being rather than being in support of "effort preference".

Evergreen

Senior Member (Voting Rights)

I wonder if blood flow to the brain had any impact on the results. There is at least some good evidence to suggest that, when upright, blood flow to the brain is reduced in pw ME/CFS. Surely this would impact decision making as time goes on. I assume no effort was made to determine if this played a role.

Definitely a possibility. In the repetitive grip testing by functional imaging they report:

We also assessed changes across blocks with a two-way ANOVA (2 groups × 4 blocks), which showed that blood oxygen level dependent (BOLD) signal of PI-ME/CFS participants decreased across blocks bilaterally in temporo-parietal junction (TPJ) and superior parietal lobule, and right temporal gyrus in contradistinction to the increase observed in HVs (F (3,45) = 5.4, voxel threshold p ≤ 0.01, corrected for multiple comparisons p ≤ 0.05, k > 65; Fig. 4d, e). TPJ activity is inversely correlated with the match between willed action and the produced movement.

Maybe reduced blood flow to the brain could be what causes pwME to stop being able to exert?

Fwiw, I had cerebral blood flow measured during a tilt test and it was supposedly fine. (I fainted quite spectacularly, though only at 32 mins.) The sensors were only placed on the left side of my head, though - I don't know if they would have picked up reduced flow on either side or only on the left side. I was disappointed as I get all kinds of weird sensations on the right side of my head - tingling etc - and absolutely none on the left. My understanding is that there are a number of different ways to measure cerebral blood flow. I have no idea if the method used would be considered a good one or not.

They keep claiming in their communication about the study that effort preference was related to decreased activation in the right TPJ but as far as I can tell, they didn't actually test for this relationship. There's no statistical test reported that shows that these are related. They are just asserting that they are because they found both of them in the same cohort [my bolding]

Agree.

If it takes that many words to explain, it suggests to me they don't really know what they mean. Have either Nath or Walitt actually produced a succinct definition of effort preference?

The closest I can find in the paper is this:

Effort preference is how much effort a person subjectively wants to exert. It is often seen as a trade-off between the energy needed to do a task versus the reward for having tried to do it successfully. If there is developing fatigue, the effort will have to increase, and the effort:benefit ratio will increase, perhaps to the point where a person will prefer to lose a reward than to exert effort. Thus, as fatigue develops, failure can occur because of depletion of capacity or an unfavorable preference

In my view that describes a voluntary behaviour. But as lots of us have been pointing out, they're thinking of fatigue only as something that isn't there at the beginning of a task and might develop during it, not as what pwME come into each task with.

Yeah I think they would likely push back on it being an arbitrary choice. It could be something to look for in the other EEfRT studies, whether others pick a cut point for what's considered an adequate completion rate.

Agree, there must be a reason why some studies started doing individual calibration. @andrewkq, am interested to know if you have any thoughts on this (which I wrote above in reply to @EndME's good point):

Alternatively, you could say =100% vs <100, and the percentages would be 45% and 35%, and check that. I suggested above and below 90% because it seemed more reasonable clinically to allow healthy volunteers to not be perfect (but still not lose much confidence, thus not affecting their decision much to choose between hard or easy), since 3/16 were in the 90-95% range (one patient was also in that range). That group of 4 who complete hard tasks successfully 90-95% of the time has an intermediate proportion of hard tasks completed/hard tasks chosen of 40%.

So you could look at it as 3 groups:

45% hard tasks chosen (=100% completed successfully, n=16 including 5 patients)

40% hard tasks chosen (90-99% completed successfully, n=4 including 1 patient)

33% hard tasks chosen (0-89% completed successfully, n=11 including 2 HVs)

I didn't think arguing a dose-response kind of relationship on the basis of 4 people would be a strong argument but others may think differently. I thought dividing into 2 groups with as much of a mix of HVs and patients as possible was the best option.
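The three-group split above could be reproduced along these lines. This is only a sketch: the band edges follow the list (100%, 90-99%, 0-89%), but the participant tuples are invented, and averaging each person's proportion with equal weight is an assumption about how the group percentages were calculated.

```python
def completion_band(hard_completion_rate):
    """Assign a participant to a band by hard-task completion rate (0.0 to 1.0)."""
    if hard_completion_rate >= 1.0:
        return "100%"
    if hard_completion_rate >= 0.90:
        return "90-99%"
    return "0-89%"


def mean_hard_choice_by_band(participants):
    """participants: list of (hard_completion_rate, proportion_hard_chosen).

    Returns the unweighted mean proportion of hard choices per band.
    """
    by_band = {}
    for rate, chosen in participants:
        by_band.setdefault(completion_band(rate), []).append(chosen)
    return {band: sum(vals) / len(vals) for band, vals in by_band.items()}


# Invented participants, for illustration only (not study data).
example_participants = [
    (1.00, 0.50),
    (1.00, 0.40),
    (0.93, 0.40),
    (0.60, 0.30),
    (0.10, 0.36),
]
band_means = mean_hard_choice_by_band(example_participants)
for band, mean in band_means.items():
    print(f"{band}: {mean:.0%} hard tasks chosen")
```

Collapsing the two lower bands into one gives the two-group version, which keeps more of a mix of HVs and patients in each group.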

The argument would be that when people find they're failing some hard tasks, it factors into their choice between hard and easy tasks, after the probability of the reward, and alongside the value of the reward (reading from @andrewkq 's analysis). And there might be a small amount that they consider reasonable to fail without it factoring into their choice much or at all. And then to explain why some people hurl themselves at it repeatedly despite all the failure, I would say, they're playing a game, they know what they've been told to do and they're doing it, and/or they remain reward-driven, whether that's the small monetary win or the approval of testers. Resilience, innit. Or some such.

It stands for Generalized Estimating Equation...

Thank you so much for explaining all of that. Much appreciated.

I would like to know if the investigators agree that the performance of around half of the ME/CFS cohort actually did not look much different to most of the healthy controls, and how they explain that, in terms of their theory that reduced effort preference is the core problem in ME/CFS.

And also, what sort of performance they would expect from people who are physically incapable of completing the hard task.

Agree. Would be nice if some of the literature touched on these points, I haven't been able to delve in yet...

They tested all of the interactions that the task was designed to tease out (which have actual theoretical justifications), but they were all non-significant, so they invented a new hypothesis post-hoc based around the one significant result they dredged up.

Yep, possible. Based on Walitt's pre-existing views on CFS, and the fear-avoidance/deconditioning model, I'd say this was pre-hoc.

But that means they only looked at fatigue caused by their 15 min clicking test, not by fatigue caused by a chronic illness that was already there at the start of the clicking test.

Exactly.

A good point. I think there may be a confusion between 'fatigue' in the sense of fatiguing during a task series and the subjective symptom loosely known as fatigue. They are totally different things.

Exactly.

I think that this is as important and valid a critique as any of the methodological flaws we've found. I think this alone should be a letter to the editor, the task should never have been used in this way because it cannot isolate preference (this is also why the question is a useless question). For me, I just doubt that the authors are going to engage in a discussion of theory with any intellectual integrity when they've already demonstrated that they have no problems with such glaring contradictions, so my hope is that a methodological critique will be harder to ignore.

Agree.

I don't want to divert this thread, but I wonder whether this study has any relevance here:

People with Long Covid and ME/CFS Exhibit Similarly Impaired Dexterity and Bimanual Coordination: A Case-Case-Control Study, 2024, Sanal-Hayes

Great find. I think it's very relevant - any evidence that pwME could have been expected to enter this task at a motoric disadvantage compared to the healthy volunteers supports the idea that the task as operationalised by Walitt et al could not assess preference without individual calibration.

Evergreen

Senior Member (Voting Rights)

Or even better, do the quick easy task and fail it so it doesn't go into the pool of rewards

Have you been fraternising with HV F?

However, when I began looking into it nothing too fruitful came of it, at least at a first glance with a basic analysis.

Yes, I came to the same conclusion - there didn't seem to be much difference in a tendency to do back to back tasks.

Kitty

Senior Member (Voting Rights)

in a EEfRT study you measure a tremendous amount of things so it's very likely you'll find one thing that is statistically significant, but then can't be reproduced in further studies

Guess that doesn't matter, if you can get more grants to try.

JemPD

Senior Member (Voting Rights)

Evergreen thank you sooo much for your detailed response.

From a scientific point of view, it’s only a hypothesis – the hypothesis that patients were factoring in penalties that healthy volunteers weren’t. And this may not seem plausible to many scientists:

This makes sense to me, you can't prove one hypothesis wrong with another hypothesis, no matter how obviously correct it might seem to those who have lived experience of the thing occurring. It is too easy to break down.

You can't disprove A by presenting B which is also unproven.

I am neither physically nor emotionally robust enough to write letters, nor do I have the professional scientific language to do so, but I am so grateful for all the work that is going into all this analysis. I can't understand most of it as I am too cognitively impaired at the moment; all the maths and charts etc are completely opaque to me. But I am massively grateful that others are doing it, and are sacrificing their time & energy to do so.

Thank you all for hearing me and explaining, glad to know I'm not way off the mark.