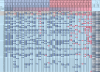

OK, I spoke too soon of course. I forgot about the warm-up rounds. So the following tables exclude the warm-ups/trials with a '- number' and also exclude HVF (with the figures as they would be if HVF had been included shown on the right)

View attachment 21295

Which makes for the following calcs per person (15 ME-CFS participants, 16 HVs):

No. of hard/person, ME-CFS, 0.5 level: 4.67

No. of hard/person, ME-CFS, 0.88 level: 10.00

Both med + high level: 14.67

No. of hard/person, HV, 0.5 level: 6.38

No. of hard/person, HV, 0.88 level: 10.13

Both med + high level: 16.5

This actually brings out what looks like quite a big % difference at the low-probability level (hard chosen 12.55% of the time by ME-CFS and 18.41% by HVs at this level), even though the numbers behind it are actually small (HVs chose 44 hard, ME-CFS 29, a difference of 15). It equates to a bit under 1 more hard choice per person though (44/16 = 2.75 vs 29/15 = 1.93)?
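To make the per-person arithmetic at the low-probability level easy to check, here is a minimal sketch. It uses only the counts quoted above (44 and 29 hard choices, 16 HVs and 15 ME-CFS participants); the function name `hard_per_person` is just something I've made up for illustration.

```python
# Hard choices at the low-probability level, as quoted in the post.
HARD_LOW = {"HV": 44, "ME-CFS": 29}
# Number of participants per group, as quoted in the post.
N = {"HV": 16, "ME-CFS": 15}


def hard_per_person(group: str) -> float:
    """Average number of hard choices per participant in a group."""
    return HARD_LOW[group] / N[group]


hv_low = hard_per_person("HV")
me_low = hard_per_person("ME-CFS")
low_diff = hv_low - me_low

print(f"HV:     {hv_low:.2f} hard choices per person")
print(f"ME-CFS: {me_low:.2f} hard choices per person")
print(f"Difference: {low_diff:.2f} per person")
```

Dividing by group size first is what stops the raw gap of 15 choices from being inflated by the HV group having one extra person.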

And a 2.5% difference at the high probability level, but underneath that is only 12 hard choices.

I've done the calculations per person because of the difference in 'N' (number of participants) between the groups. At the high-probability level, the number of hard selected per person is 10 (ME-CFS) vs 10.13 (HVs).

At the 50:50 probability level the difference is 1.71 hard choices per person: 4.67 were chosen as 'hard' for ME-CFS and 6.38 for HVs (out of an average of around 16 tasks at that level, each a choice between easy and hard)
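The per-person gaps can be recomputed from the averages listed earlier. This is only a rough sketch: the group totals are back-calculated (average × N, rounded) rather than taken from the tables, so treat them as an assumption. They do at least reproduce the 12-choice gap at the high-probability level.

```python
# Per-person averages of hard choices, as listed in the post.
PER_PERSON = {
    "ME-CFS": {"0.5": 4.67, "0.88": 10.00},
    "HV": {"0.5": 6.38, "0.88": 10.13},
}
N = {"ME-CFS": 15, "HV": 16}

# HV minus ME-CFS gap in hard choices per person at each level.
gap = {
    level: round(PER_PERSON["HV"][level] - PER_PERSON["ME-CFS"][level], 2)
    for level in ("0.5", "0.88")
}

# ASSUMPTION: totals are back-calculated from the rounded averages,
# not read off the original tables.
implied_total = {
    group: {level: round(avg * N[group]) for level, avg in levels.items()}
    for group, levels in PER_PERSON.items()
}

print("Per-person gap (HV minus ME-CFS):", gap)
print("Implied group totals:", implied_total)
```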