Below I present my first collection of plots. For the most part they show what the brilliant plots made by @Murph, @bobbler and @Karen Kirke have already shown. All of these plots and calculations were done in R, so the colours, formatting and presentation can easily be changed, and the plots, code and calculations (mean, SD etc.) are suitable for publication if the appropriate changes are made.

I present every plot once for all participants and once excluding HV F (his data is fairly irrelevant for what is presented here, but I've included it anyway); the same applies to the calculations. All of these plots centre on the completion rates of hard rounds; easy rounds and their completion rates have been ignored for now.

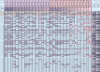

The first plot is similar to what @Karen Kirke first presented, but I chose a slightly different graphical presentation and also included mean values, which I believe already tell us a lot (red is for ME/CFS and blue is for HV; dotted lines are the mean values for successful completion, whilst continuous lines are the mean number of hard trials). I believe @Simon M first brought up the idea of including these. I have stuck to ordering participants alphabetically for now, but will still look at whether ordering them by things such as SF36 could reveal something interesting. The ratio of the different colours directly shows the percentage of hard rounds successfully completed, instead of this being a separate piece of information.

View attachment 21308

The same applies when HV F is excluded, see below.

View attachment 21309

These plots clearly indicate the massive difference between pwME and HV in the percentage of hard tasks completed. Note also how far pwME stray from the mean; there is a lot of deviation. This has all been discussed at length already.
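For anyone who wants to reproduce the numbers behind these bars, here is a minimal sketch of the calculation in Python. It is not the R code used for the plots. The record layout `(participant, group, chose_hard, completed)` and the participant labels are assumptions for illustration, and the toy records are made up, not study data.

```python
from statistics import mean, stdev

# Toy records, NOT the study data: (participant, group, chose_hard, completed)
toy_trials = [
    ("P1", "ME/CFS", True, False),
    ("P1", "ME/CFS", True, True),
    ("P1", "ME/CFS", False, True),   # easy round, ignored below
    ("P2", "ME/CFS", True, True),
    ("P2", "ME/CFS", True, True),
    ("H1", "HV", True, True),
    ("H1", "HV", True, True),
    ("H2", "HV", True, True),
    ("H2", "HV", True, False),
]

def hard_completion_rates(trials):
    """Return {participant: (group, hard_chosen, hard_completed, rate)}."""
    counts = {}
    for pid, group, chose_hard, completed in trials:
        if not chose_hard:
            continue  # only hard rounds are considered
        g, n, k = counts.get(pid, (group, 0, 0))
        counts[pid] = (g, n + 1, k + int(completed))
    return {pid: (g, n, k, k / n) for pid, (g, n, k) in counts.items()}

def group_summary(rates):
    """Return {group: (mean rate, SD of rate)} across participants."""
    by_group = {}
    for g, _n, _k, r in rates.values():
        by_group.setdefault(g, []).append(r)
    return {
        g: (mean(rs), stdev(rs) if len(rs) > 1 else 0.0)
        for g, rs in by_group.items()
    }

rates = hard_completion_rates(toy_trials)
summary = group_summary(rates)
```

The per-participant rate is the ratio of the two colours in each bar, and the SD per group is one way to put a number on how far individuals stray from the group mean.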

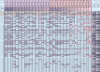

The second round of plots is analogous to the plots above, but covers only the first 35 rounds (excluding the practice rounds). These plots are more "interesting" than the plots above, as they ensure that all participants have played the same number of rounds and also limit in-game fatiguing effects. Focusing on a fixed number of rounds has been done in several different EEfRT studies; the choice of 35 is somewhat arbitrary and varies from paper to paper (large enough to be significant, and not so large that in-game fatigue occurs).

View attachment 21310

The same applies when HV F is excluded, see below.

View attachment 21311

What we see here is just as striking as what we had already seen before.
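The restriction to the first 35 rounds can be sketched as follows. This is a hedged Python illustration, not the R code behind the plots. The field names `participant`, `round` and `practice` are hypothetical, and the toy data below is generated only to exercise the function.

```python
def first_n_rounds(trials, n=35):
    """Keep each participant's first n non-practice rounds, in order played."""
    kept = []
    seen = {}  # participant -> number of rounds kept so far
    for t in sorted(trials, key=lambda t: (t["participant"], t["round"])):
        if t["practice"]:
            continue  # practice rounds are excluded before counting
        pid = t["participant"]
        if seen.get(pid, 0) < n:
            kept.append(t)
            seen[pid] = seen.get(pid, 0) + 1
    return kept

def make_toy_participant(pid, n_real, n_practice=4):
    """Build made-up rounds: practice rounds get negative round numbers."""
    practice = [
        {"participant": pid, "round": -i, "practice": True}
        for i in range(n_practice, 0, -1)
    ]
    real = [
        {"participant": pid, "round": i, "practice": False}
        for i in range(1, n_real + 1)
    ]
    return practice + real

# Two toy participants who played different numbers of rounds.
toy = make_toy_participant("A", 48) + make_toy_participant("B", 36)
cut = first_n_rounds(toy, n=35)
```

After the cut every participant contributes the same number of rounds, which is the point of using a fixed cut-off.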

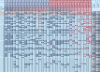

Now come the plots of the trial rounds:

View attachment 21312

The same applies when HV F is excluded, see below.

View attachment 21313

This plot clearly shows us the fundamental problem in the trial. In the trial rounds, fatigue caused purely by the game and motivation/strategy will play less of a role. Yet pwME cannot complete the tasks. We cannot know whether the results of the study would have been different if the trial rounds had served as calibration rounds; however, failing to use them as such means we cannot appropriately interpret the results of the EEfRT. Note here that pwME actually choose the hard task more often than the controls. This is the only time this is the case. Unfortunately, due to the small sample size, it's hard to conclude anything from that, apart from the fact that not appropriately calibrating the EEfRT has led to inconclusive results. The median of HV playing hard rounds during the trial rounds appears to be proportional to that in the first 35 rounds.

Finally, I have plotted the hard rounds as the game progresses, divided into two halves: the first half of the total rounds played by each player and the second half. For example, player HV A plays 48 rounds in total, so the first half is the first 24 rounds and the second half is the next 24 rounds, and we then look at the hard rounds in those halves separately. If someone plays an odd number of rounds, the extra round is part of the first half of the game.
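The splitting rule can be written down in a couple of lines. This is a small Python sketch of the rule as described, not the R code used for the plots; the function name `split_halves` is made up for illustration.

```python
def split_halves(rounds):
    """Split a player's rounds into two halves.

    With an odd number of rounds the extra round goes to the first half.
    """
    cut = (len(rounds) + 1) // 2  # rounds up for odd lengths
    return rounds[:cut], rounds[cut:]

# 48 rounds (as for HV A): 24 in each half.
first_48, second_48 = split_halves(list(range(1, 49)))

# 49 rounds: the extra round lands in the first half (25 and 24).
first_49, second_49 = split_halves(list(range(1, 50)))
```

The hard rounds chosen and completed are then counted within each half separately.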

View attachment 21314

View attachment 21315

I believe this hasn't been plotted or discussed yet at all. It strongly suggests that the mix of learning effects, saturation effects and fatigue as the rounds progress actually dominates the completion of hard tasks in HV more than it does in pwME. Both groups are still opting for hard rounds just as much as at the beginning of the game, but even HV are completing them less often now. This has been seen in several other studies and is one of the reasons a universal cut-off is typically used in combination with using trial rounds as calibration.

In terms of completing hard tasks: after having played the first half of their rounds, HV are now closer to the point pwME were at at the beginning of the game. Would their behaviours eventually converge? We would need far more data to know more about that.

The same naturally applies if HV F is excluded (see below).

View attachment 21316 View attachment 21317

In the next collection of plots I'll consider the above data in light of the SF36 data of the participants and also the different reward and probability levels of hard trials.