Use of EEfRT in the NIH study: Deep phenotyping of PI-ME/CFS, 2024, Walitt et al

- Thread starter Andy

Is the virtual reward possibly what the participants theoretically won based on all the tasks while the actual reward is the payout selected from two random tasks? (I might be misunderstanding it)

I don't know, but I think I'm going to get to play the 2022 version of the game tomorrow. I'll let you know if it denotes a separate "virtual reward" for the total at the end.

Karen Kirke

Senior Member (Voting Rights)

My mapMECFS.org account is pending. Can you share the rewards chart here?

You sign a user agreement that says you cannot share the data except with others registered with mapMECFS. So you can analyse the data and share the results of that analysis, but not the data. My account came through within a day, so you shouldn't be waiting long.

Karen Kirke

Senior Member (Voting Rights)

Is the virtual reward possibly what the participants theoretically won based on all the tasks while the actual reward is the payout selected from two random tasks? (I might be misunderstanding it)

Yes, you're right.

Karen Kirke

Senior Member (Voting Rights)

From part 2 of Jeanette's blog:

[This] does not change the fact that the groups essentially performed the same regarding the virtual rewards won (with the proper exclusion of those patients who were unable to complete hard tasks at a valid rate or at all).

Does the part in brackets mean that her analysis of virtual rewards won excludes the five patients she argues should have been excluded? Ns would be helpful.

ME/CFS Science Blog

Senior Member (Voting Rights)

Apologies if this has already been addressed. I am still reading through and on page 18 of 35. Was there ever a chart of all the participants' "PHTC" posted?

What do you mean by PHTC?

ME/CFS Science Blog

Senior Member (Voting Rights)

Does the part in brackets mean that her analysis of virtual rewards won excludes the five patients she argues should have been excluded? Ns would be helpful.

I think so. Without excluding those 5, the controls would have a higher amount of 'virtual rewards':

https://www.s4me.info/threads/use-o...s-2024-walitt-et-al.37463/page-33#post-537825

Karen Kirke

Senior Member (Voting Rights)

I think so. Without excluding those 5, the controls would have a higher amount of 'virtual rewards':

https://www.s4me.info/threads/use-o...s-2024-walitt-et-al.37463/page-33#post-537825

Very helpful post. I had seen it, and then apparently erased it from my brain.

Below is also the proportion of hard efforts per participant. The original study used a duration of 20 minutes and only looked at the first 50 trials to make the analysis more consistent. In the Walitt et al. study the duration was reduced to 15 minutes, so few patients reached 50 trials.

"Effort preference, the decision to avoid the harder task when decision-making is unsupervised and reward values and probabilities of receiving a reward are standardized, was estimated using the Proportion of Hard-Task Choices (PHTC) metric.

Is this the same thing as this chart re: proportion of hard trials?

Isn't this the key rebuttal? As in, if the effort preference of a good chunk of ME patients is no different to the healthies (and in many cases exceeds them), then it can't be a central, defining feature of ME.

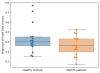

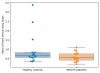

Did anyone make a chart of this? In my view Sam is exactly right and IMO a box and whisker chart for this would be perfect. How can the key difference between HV and ME/CFS be effort preference if there is a huge overlap in the scatter plot?

ME/CFS Science Blog

Senior Member (Voting Rights)

"Effort preference, the decision to avoid the harder task when decision-making is unsupervised and reward values and probabilities of receiving a reward are standardized, was estimated using the Proportion of Hard-Task Choices (PHTC) metric.

Is this the same thing as this chart re: proportion of hard trials?

Did anyone make a chart of this? In my view Sam is exactly right and IMO a box and whisker chart for this would be perfect. How can the key difference between HV and ME/CFS be effort preference if there is a huge overlap in the scatter plot?

View attachment 22345

View attachment 22344

Below are some graphs from earlier posts. @Murph and @ME/CFS Skeptic also made some graphs, I posted some other graphs elsewhere, and so did some others; it was all somewhat earlier in this thread.

Below I will be presenting my first collection of plots. For the most part this is what the brilliant plots @Murph, @bobbler and @Karen Kirke made have already shown. All of these plots and calculations were done in R, so the colours, formatting and presentation can easily be changed, and the plots, code and calculations (mean, SD etc.) are suitable for being published if the appropriate changes are made.

I will be presenting every plot once for all the participants and once excluding HV F (his data is pretty irrelevant for what will be presented here but I've included it anyway); the same applies to the calculations. All of these plots are centred on the completion rates of hard rounds; easy rounds and their completion rates have been ignored for now.

The first plot is similar to what @Karen Kirke first presented, but I chose a slightly different graphical presentation and also included mean values that I believe already tell us a lot (red is for ME/CFS, blue is for HV; dotted lines are the mean values for successful completion, whilst continuous lines are the mean number of hard trials). @Simon M first brought up the idea of including these, I believe. I have stuck to ranking participants alphabetically for now, but will still look at whether ranking them by things such as SF36 could reveal something interesting. The ratio of the different colours directly shows the percentage of hard rounds successfully completed, instead of this being a separate piece of information.

View attachment 21308

The same applies when HV F is excluded, see below.

View attachment 21309

These plots clearly indicate the massive difference between the percentage of pwME and HV that complete hard tasks. Note also how far pwME stray from the mean; there is a lot of deviation. This has all been discussed at length already.

The second set of plots is analogous to the plots above, but covers only the first 35 rounds (excluding the practice rounds). These plots are more "interesting" than the plots above, as they ensure that all participants have played the same number of rounds and also limit in-game fatiguing effects. Focusing on a fixed number of rounds has been done in several different EEfRT studies; the choice of 35 is somewhat arbitrary and varies from paper to paper (large enough to be significant and not so large that in-game fatigue occurs).
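As a rough illustration of the fixed cut-off described above, here is a minimal Python sketch (the plots themselves were made in R). The record layout, the field names `trial`, `hard` and `completed`, and the sample rows are all made up for illustration; they are not the actual mapMECFS data format.

```python
from typing import Dict, List

CUTOFF = 35  # number of non-practice rounds kept per participant


def summarise_first_rounds(rounds: List[Dict], cutoff: int = CUTOFF) -> Dict[str, float]:
    """Count hard choices and hard completions within the first `cutoff` rounds.

    Each round is a dict with hypothetical keys:
      'trial'     - 1-based round number, practice rounds already removed
      'hard'      - True if the hard task was chosen
      'completed' - True if the chosen task was completed
    """
    kept = [r for r in rounds if r["trial"] <= cutoff]
    hard = [r for r in kept if r["hard"]]
    hard_done = [r for r in hard if r["completed"]]
    return {
        "rounds": len(kept),
        "hard_chosen": len(hard),
        "hard_completed": len(hard_done),
        # Undefined (NaN) if the participant never chose the hard task.
        "hard_completion_rate": len(hard_done) / len(hard) if hard else float("nan"),
    }


# Invented participant: 40 rounds, every 4th round is hard,
# and every second hard round (every 8th round) is not completed.
example = [
    {"trial": t, "hard": t % 4 == 0, "completed": t % 8 != 0}
    for t in range(1, 41)
]
summary = summarise_first_rounds(example)
print(summary)
```

Rounds 36 to 40 of the invented participant are simply dropped, so every participant contributes the same number of rounds to the comparison.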

View attachment 21310

The same applies when HV F is excluded, see below.

View attachment 21311

What we see here is just as striking as what we had already seen before.

Now come the plots of the trial rounds:

View attachment 21312

The same applies when HV F is excluded, see below.

View attachment 21313

This plot clearly shows us the fundamental problem in the trial. In the trial rounds, fatigue caused purely by the game and motivation/strategy will play less of a role. Yet pwME cannot complete the tasks. We cannot know whether the results of the study would have been different if the trial rounds had served as calibration rounds; however, failing to use them as such means we cannot appropriately interpret the results of the EEfRT. Note here that pwME actually choose the hard task more often than the controls. This is the only time this is the case. Unfortunately, due to the small sample size it's hard to conclude anything from that, apart from the fact that not appropriately calibrating the EEfRT has led to inconclusive results. The median of HV playing hard rounds during the trial rounds appears to be proportional to that in the first 35 rounds.

Finally, I have plotted the hard rounds as the game progresses, divided into two halves: the first half of the total rounds played per player and the second half. For example, player HV A plays 48 rounds in total, so the first half is the first 24 rounds and the second half the next 24 rounds, and we then look at the hard rounds in those halves separately. If someone plays an odd number of rounds, the extra round is part of the first half of the game. The behaviour of these plots might be dominated by people who play more rounds; I will later plot this using a cut-off so that everyone's halves are equally big.
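The halving rule described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names are hypothetical, and the hard/easy patterns are invented rather than taken from the data.

```python
from typing import List, Tuple


def split_into_halves(rounds: List[bool]) -> Tuple[List[bool], List[bool]]:
    """Split one participant's rounds into a first and a second half.

    With an odd number of rounds, the extra round goes to the first half.
    """
    midpoint = (len(rounds) + 1) // 2
    return rounds[:midpoint], rounds[midpoint:]


def hard_counts_by_half(hard_flags: List[bool]) -> Tuple[int, int]:
    """Number of hard rounds in the first and in the second half."""
    first, second = split_into_halves(hard_flags)
    return sum(first), sum(second)


# 48 rounds, as for HV A in the text; the hard/easy pattern is invented.
flags_48 = [i % 3 == 0 for i in range(48)]
first_48, second_48 = split_into_halves(flags_48)
print(len(first_48), len(second_48))

# An odd number of rounds: the first half gets the extra round.
first_41, second_41 = split_into_halves([True] * 41)
print(len(first_41), len(second_41))
```

Because the halves scale with the number of rounds each person played, participants with more rounds contribute more data points, which is the reason for the equal-sized cut-off mentioned above.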

View attachment 21314

View attachment 21315

I believe this hasn't been plotted or discussed yet at all. It strongly suggests that the mix of learning effects, saturation effects and fatigue as the rounds progress actually dominates the completion of hard tasks in HV more than it does in pwME. Both are still opting to go for hard rounds just as much as at the beginning of the game, but even HV are completing them less often now. This has been seen in several other studies and is one of the reasons a universal cut-off is typically used in combination with using trial rounds as calibration.

In terms of completing hard tasks: after having played the first half of their rounds, HV are now closer to the point pwME were at at the beginning of the game. Would their behaviours eventually converge? We would need far more data to know more about that....

The same naturally applies if HV F is excluded (see below).

View attachment 21316 View attachment 21317

In the next collection of plots I'll consider the above data in light of the participants' SF36 data and also the different reward and probability levels of hard trials.

Yes! Is this the calculation: "The primary measure of the EEfRT task is Proportion of Hard Task Choices (effort preference). This behavioral measure is the ratio of the number of times the hard task was selected compared to the number of times the easy task was selected. This metric is used to estimate effort preference, the decision to avoid the harder task when decision-making is unsupervised and reward values and probabilities of receiving a reward are standardized."?

Or is it the number of hard tasks selected compared to the total number of trials?

I believe I messed that up in the chart I created using your previous data.

ME/CFS Science Blog

Senior Member (Voting Rights)

There's also this graph that shows the mean of hard task choices per group as the trials progressed, originally posted here:

https://www.s4me.info/threads/use-o...s-2024-walitt-et-al.37463/page-24#post-520344

ME/CFS Science Blog

Senior Member (Voting Rights)

or is it the number of hard task selected compared to the total number of trials?

No, I initially used the number of hard tasks divided by the total number of trials because that is easier to interpret.

I suspect they use this ratio because odds are easier to work with in statistical modelling but when plotting the raw data I think that dividing by the total number of trials is more straightforward (compared to the ratio of hard/easy tasks).

If I try to plot the ratio it looks like this:

Note that this is not exactly the same as their primary outcome measure because the latter refers to the result of statistical modelling that takes sex, reward, probability of reward, trial and expected value into account. @andrewkq, I and others tried to replicate their modelling and got similar but not identical results.
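To make the difference between the two raw measures concrete, here is a minimal Python sketch. The function names and the counts are invented for illustration, and neither function reproduces the paper's modelled outcome; they only contrast "hard divided by total" with "hard divided by easy".

```python
def proportion_hard(n_hard: int, n_easy: int) -> float:
    """Hard choices divided by all choices; always between 0 and 1."""
    total = n_hard + n_easy
    if total == 0:
        raise ValueError("participant has no trials")
    return n_hard / total


def hard_to_easy_ratio(n_hard: int, n_easy: int) -> float:
    """Hard choices divided by easy choices, i.e. the odds of choosing hard."""
    if n_hard + n_easy == 0:
        raise ValueError("participant has no trials")
    if n_easy == 0:
        return float("inf")  # only hard tasks chosen: the ratio is unbounded
    return n_hard / n_easy


# Invented participant: 12 hard and 36 easy choices.
p = proportion_hard(12, 36)
odds = hard_to_easy_ratio(12, 36)
print(p, odds)

# The two measures carry the same information: odds = p / (1 - p).
print(abs(odds - p / (1 - p)) < 1e-12)
```

The proportion is bounded and easy to read off a plot, while the ratio grows without limit as easy choices approach zero, which is one reason raw plots of the two can look quite different.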

No, I initially used the number of hard tasks divided by the total number of trials because that is easier to interpret.

I suspect they use this ratio because odds are easier to work with in statistical modelling but when plotting the raw data I think that dividing by the total number of trials is more straightforward (compared to the ratio of hard/easy tasks).

If I try to plot the ratio it looks like this:

View attachment 22350

Note that this is not exactly the same as their primary outcome measure because the latter refers to the result of statistical modelling that takes sex, reward, probability of reward, trial and expected value into account. @andrewkq, I and others tried to replicate their modelling and got similar but not identical results.

Are "choice times" of 5 removed? Those weren't choices, but were selected by the computer due to no input. This appears to occur 22 times.

ME/CFS Science Blog

Senior Member (Voting Rights)

Are "choice times" of 5 removed? Those weren't choices, but were selected by the computer due to no input. This appears to occur 22 times.

In the data and graphs I used, no. I suspect Walitt et al. did not exclude them either because they don't mention this in the paper and the data sheet says that those with 5s choice time had valid data (Valid Data_is_1 = 1). If I remember correctly, Treadway et al. said that these should be removed though.

I don't think this is an important point, because only 22 of the 1441 rows had a choice time of 5. I've redone the analysis and graph above without those with a choice time of 5s and it seems to have no influence.
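A sensitivity check of this kind can be sketched as follows in Python. The field names `choice_time` and `hard`, the helper names, and the four sample rows are assumptions for illustration only; the real data sheet uses its own column names. Treating a recorded choice time of 5 s or more as "no input" is also an assumption.

```python
from typing import Dict, List

TIMEOUT_SECONDS = 5.0  # assumed choice time recorded when the computer chose


def drop_timeouts(rows: List[Dict], timeout: float = TIMEOUT_SECONDS) -> List[Dict]:
    """Keep only rows where the participant actually made the choice."""
    return [r for r in rows if r["choice_time"] < timeout]


def hard_share(rows: List[Dict]) -> float:
    """Share of rows in which the hard task was selected."""
    return sum(1 for r in rows if r["hard"]) / len(rows)


# Invented rows, just to show the before/after comparison.
rows = [
    {"choice_time": 1.2, "hard": True},
    {"choice_time": 5.0, "hard": False},  # timed out, selected by the computer
    {"choice_time": 2.4, "hard": False},
    {"choice_time": 0.9, "hard": True},
]
before = hard_share(rows)
after = hard_share(drop_timeouts(rows))
print(before, after)

# Share of affected rows using the counts quoted in the thread.
share_timed_out = 22 / 1441
print(f"{share_timed_out:.1%}")
```

With only about 1.5% of rows affected, it is plausible that removing them barely moves the group-level results, which matches what was reported above.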

FYI the person processing my FOIA claim sent me this today: 'My understanding based on your descriptions is that you are concerned about potential research misconduct in the intramural study, and were looking at Dr. Walitt because he was the lead researcher. In consulting NINDS, we learned that Brian Walitt did not administer the EEfRT task. Brian Walitt also did not develop the version of the test used in the study. Nor did Brian Walitt conduct most of the data analysis. Results were reviewed and analyses were refined in discussions with Brian Walitt and others.'

(I know this could be a smokescreen, but sharing)

Someone decided to make this the 3rd sentence of the abstract. "Among the many physical and cognitive complaints, one defining feature of PI-ME/CFS was an alteration of effort preference, rather than physical or central fatigue, due to dysfunction of integrative brain regions potentially associated with central catechol pathway dysregulation, with consequences on autonomic functioning and physical conditioning."

and then go on to try to correlate "effort preference" with every other variable in the study....

Someone decided to make this the 3rd sentence of the abstract. "Among the many physical and cognitive complaints, one defining feature of PI-ME/CFS was an alteration of effort preference, rather than physical or central fatigue, due to dysfunction of integrative brain regions potentially associated with central catechol pathway dysregulation, with consequences on autonomic functioning and physical conditioning."

and then go on to try to correlate "effort preference" with every other variable in the study....

I think that's the bit about it I find most alarmingly unscientific. They did one inappropriate test not validated for pwME on a tiny set of pwME and controls, and extrapolated their misinterpretation of the results as if it explained everything about ME/CFS. I don't get how this passed peer review.