For the first two trials, the participant was instructed to choose the easy and hard task respectively, in order to gain familiarity with the level of effort required for each task. For the last two practice trials, the subject was free to choose.

Thanks for sharing, Binkie. This type of *directed* practice process is not described in the NIH paper. (No practice is described at all, as far as I can find.) Nor does the data reflect this direction. Rather, it looks like the participant was free to choose during the early trials. (See notes and table below.)

Some relevant notes:

(Note about my notes: I did the best I could to double-check my work, but a few errors likely remain. I think the general ideas could still be relevant to the discussion.)

Total time, 15-minute limit, practice trials

Suspicions about the starting trial led me to investigate time.

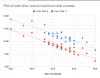

Chart: total time (minutes) versus maximum trial number for each participant

- Total time (min) = sum over all trials of (choice time + completion time) / 60, for each participant

- Maximum trial is the highest trial a participant reaches.

Blue includes the time of the practice trials, but does not add the 4 practice trials to the maximum trial number. Red includes only the trials from Trial 1 onward.

The tight correlation between maximum trial number and total time is consistent with a fixed overhead of about 7 seconds per trial: two 1-second screens* and two 2.5-second screens* (?). When looking at the variability, it may be important to note that the maximum total time here is 26 seconds, with an average of 12.5 seconds.

(*See methodology, pp. 18-19 of the paper. The duration of the last two screens is not given in the paper, even though every other part is described in detail.)
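The overhead arithmetic can be made explicit as a rough check. This is a minimal sketch, assuming the four fixed screens last 1, 1, 2.5 and 2.5 seconds (the 2.5-second values are my guess, since the paper does not give them) and assuming the 26-second maximum and 12.5-second average above are per-trial choice + completion times. All function names are hypothetical helpers, not study code.

```python
# Assumed fixed screens per trial: two 1 s screens and two 2.5 s screens.
# The 2.5 s durations are a guess; the paper does not state them.
FIXED_SCREENS_S = (1.0, 1.0, 2.5, 2.5)
OVERHEAD_S = sum(FIXED_SCREENS_S)  # 7.0 s per trial


def active_minutes(choice_s, completion_s):
    """Sum of choice + completion time over all trials, in minutes."""
    if len(choice_s) != len(completion_s):
        raise ValueError("need one choice and one completion time per trial")
    return sum(c + k for c, k in zip(choice_s, completion_s)) / 60.0


def implied_overhead_s(active_min, n_trials, limit_min=15.0):
    """Per-trial overhead (s) that would make active time + overhead fill the limit."""
    return (limit_min - active_min) * 60.0 / n_trials


def trials_in_limit(mean_active_s, limit_s=15 * 60, overhead_s=OVERHEAD_S):
    """Whole trials that fit if every trial takes mean_active_s plus the overhead."""
    return int(limit_s // (mean_active_s + overhead_s))


print(OVERHEAD_S)             # 7.0
print(trials_in_limit(12.5))  # 46  (900 s / 19.5 s per trial)
print(trials_in_limit(26.0))  # 27  (900 s / 33 s per trial)
```

If the assumptions hold, `implied_overhead_s` applied to each participant's active time and trial count should land near 7 seconds; a participant far from that would be worth a closer look.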

It is not clear at what point the task is stopped. Is the last trial started before the 15-minute mark and allowed to complete? (Thank you, @ME/CFS Skeptic.)
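The two readings of the stopping rule can give different trial counts for the same participant. A minimal sketch, using a hypothetical `completed_trials` helper that assumes a 900-second limit and per-trial durations that already include the fixed screens; it is not the task's actual code.

```python
def completed_trials(durations_s, limit_s=900.0, finish_last=False):
    """Count trials completed under one of two hypothetical stopping rules.

    durations_s: full per-trial durations in seconds (overhead included).
    finish_last=False: hard stop, a trial counts only if it ends by limit_s.
    finish_last=True: any trial that starts before limit_s runs to the end.
    Returns (number of completed trials, elapsed seconds).
    """
    elapsed = 0.0
    count = 0
    for d in durations_s:
        if elapsed >= limit_s:
            break  # no new trial starts once the limit is reached
        if not finish_last and elapsed + d > limit_s:
            break  # hard stop: this trial would be cut off
        elapsed += d
        count += 1
    return count, elapsed


example = [300, 300, 250, 100]
print(completed_trials(example))                    # (3, 850.0)
print(completed_trials(example, finish_last=True))  # (4, 950.0)
```

Under the hard stop, a participant's last partial trial simply disappears; under the second rule, total time can run past 15 minutes by up to one trial.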

PwME/CFS may "Prefer" More Effort in Practice and First Trials


I'm very curious whether all of these "practice trials" happened before the 15-minute timer started, and I wonder what happened in them. During these practice trials, PI-ME/CFS participants "chose" hard tasks more often overall than HVs. (If they were following instructions, why were they instructed to choose more hard tasks than HVs? This would leave them more fatigued at the beginning of Trial 1.)

Trial 1: Error in Paper

HV: 0.19 chose hard, 0.81 avoided hard

PI: 0.40 chose hard, 0.60 avoided hard

This gives OR = 0.74, whereas the paper reports OR = 1.6 at the start of the task (Trial 1).

(See notes for Figure 3a.)

No adjustment is needed for prize value, probability, or trial number, because these are the same for all participants in a given trial. The comparison is not adjusted for sex, but 43% of HVs are male versus 40% of PI-ME/CFS participants, which favors HVs.

@ME/CFS Skeptic pointed this out earlier. (P.S. I may be calculating the OR incorrectly, but at least the direction should be correct here.)

for Trial 1, patients (6 out of 15) chose hard tasks more often than controls (3 out of 13). So this would result in an odds ratio of 0.34 instead of 1.65.
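A quick way to check the direction, and the 0.74 figure, is to compute the odds ratio directly from the Trial 1 proportions. This sketch assumes the paper's OR compares the odds of choosing hard for HVs with the odds for PI-ME/CFS, so OR > 1 would mean HVs choose hard more often. Under that assumption the odds ratio comes out around 0.35, close to the 0.34 quoted above, while 0.74 matches the ratio of the "avoid hard" proportions (0.6 / 0.81), which is a risk ratio rather than an odds ratio. Both are below 1, so the direction is unchanged.

```python
# Sketch, assuming OR = odds(HV chooses hard) / odds(PI-ME/CFS chooses hard),
# so OR > 1 would mean HVs choose the hard task more often.


def odds(p: float) -> float:
    """Convert a proportion to odds."""
    return p / (1.0 - p)


def odds_ratio(p_hv: float, p_pi: float) -> float:
    """Odds ratio of choosing hard, HV relative to PI-ME/CFS."""
    return odds(p_hv) / odds(p_pi)


def avoid_ratio(p_hv: float, p_pi: float) -> float:
    """Ratio of 'avoid hard' proportions, PI-ME/CFS over HV.

    This is a risk ratio, not an odds ratio.
    """
    return (1.0 - p_pi) / (1.0 - p_hv)


# Trial 1 proportions from the post: HV 0.19 chose hard, PI-ME/CFS 0.40.
print(round(odds_ratio(0.19, 0.40), 2))   # 0.35
print(round(avoid_ratio(0.19, 0.40), 2))  # 0.74
```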

PwME “win” trials -4 through -1:

HV: chose hard task 0.44, chose easy task 0.56

PI: chose hard task 0.52, chose easy task 0.48

OR = 0.86

While reward value and probability are the same for every participant in a given trial, differences between trials could skew this pooled result. (And, as mentioned above, if these trials were directed, that raises its own issues.)
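Computed as odds, the pooled practice-trial numbers give (0.44 / 0.56) / (0.52 / 0.48) ≈ 0.73 (HV odds over PI-ME/CFS odds), whereas 0.86 matches the ratio of the easy-task proportions, 0.48 / 0.56; both are below 1. To keep trial-to-trial differences from skewing a pooled figure, one standard option is a Mantel-Haenszel odds ratio that treats each trial as its own stratum. The sketch below illustrates that technique; it is not the model used in the paper, and the counts in the example are toy numbers, not study data.

```python
from typing import Iterable, Tuple

# One stratum (trial) as counts: (hv_hard, hv_easy, pi_hard, pi_easy)
Stratum = Tuple[int, int, int, int]


def mantel_haenszel_or(strata: Iterable[Stratum]) -> float:
    """Mantel-Haenszel odds ratio of choosing hard, HV relative to PI-ME/CFS.

    Each trial is its own 2x2 table, so differences in reward value or
    probability between trials are not pooled into a single table.
    """
    num = 0.0
    den = 0.0
    for hv_hard, hv_easy, pi_hard, pi_easy in strata:
        n = hv_hard + hv_easy + pi_hard + pi_easy
        if n == 0:
            continue  # an empty trial contributes nothing
        num += hv_hard * pi_easy / n
        den += hv_easy * pi_hard / n
    if den == 0:
        raise ValueError("odds ratio undefined: hv_easy * pi_hard is 0 in every trial")
    return num / den


# Toy example (NOT study data): two trials that each have OR = 2 on their own.
toy = [(4, 2, 2, 2), (6, 6, 3, 6)]
print(mantel_haenszel_or(toy))  # 2.0
```

When every trial has the same odds ratio, the pooled estimate returns it; when trials differ, each one is weighted by its size instead of being merged into one table.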

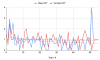

This table shows the probability that the hard task is chosen (PHTC) and the OR for the first six trials, including the trials immediately before the 15-minute timer starts:

In five of the first six trials, PwME/CFS have higher “effort preference” than healthy volunteers.

Again, none of these comparisons adjust for sex, and the sex imbalance favors HVs. So if they were adjusted for sex, PHTC would increase and the OR would decrease, each by a small fraction.
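A table like the one above can be rebuilt from trial-level data. This is a minimal sketch, assuming a hypothetical long-format record of `(group, trial, chose_hard)` with groups labeled "HV" and "PI" and practice trials numbered -4 to -1 as in this post; the real dataset's layout may differ. The OR here is HV odds over PI-ME/CFS odds, so values below 1 mean PI-ME/CFS chose hard more often.

```python
from collections import defaultdict


def phtc_table(records):
    """Per-trial PHTC for each group plus the HV-over-PI odds ratio.

    records: iterable of (group, trial, chose_hard); group must be "HV" or "PI".
    Returns {trial: (phtc_hv, phtc_pi, odds_ratio)}; odds_ratio is None when a
    cell of the 2x2 table is empty (the OR would be 0 or infinite).
    """
    # counts[trial][group] = [number choosing hard, number of choices]
    counts = defaultdict(lambda: {"HV": [0, 0], "PI": [0, 0]})
    for group, trial, chose_hard in records:
        if group not in ("HV", "PI"):
            raise ValueError(f"unknown group: {group!r}")
        cell = counts[trial][group]
        cell[0] += 1 if chose_hard else 0
        cell[1] += 1

    table = {}
    for trial in sorted(counts):
        hv_hard, hv_n = counts[trial]["HV"]
        pi_hard, pi_n = counts[trial]["PI"]
        if hv_n == 0 or pi_n == 0:
            continue  # trial seen for only one group; no comparison possible
        hv_easy, pi_easy = hv_n - hv_hard, pi_n - pi_hard
        if min(hv_hard, hv_easy, pi_hard, pi_easy) > 0:
            odds_ratio = (hv_hard * pi_easy) / (hv_easy * pi_hard)
        else:
            odds_ratio = None
        table[trial] = (hv_hard / hv_n, pi_hard / pi_n, odds_ratio)
    return table


# Example with toy records (not study data):
toy = [("HV", 1, True), ("HV", 1, False), ("HV", 1, False),
       ("PI", 1, True), ("PI", 1, True), ("PI", 1, False)]
print(phtc_table(toy))
```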

Task-Induced Fatigue

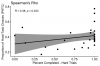

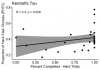

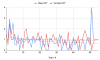

If we include practice trials, a few metrics seem to indicate that task-induced fatigue is greater in PI-ME/CFS (which Walitt will, of course, deny). For example, I tried to plot the OR, but accidentally used the probability of choosing hard tasks instead of the probability of avoiding them.

This is the first information I looked at once I realized each trial had the same variables for every participant (an apples-to-apples comparison).

NOTE WELL: This INCLUDES practice trials.

ALSO NOTE: This is not the OR; I accidentally used the percent of hard tasks chosen instead of easy tasks. Values greater than 1 mean PI-ME/CFS "prefer effort" more than HVs.

(Repeating: this chart INCLUDES practice trials; Trial -4 = 0. Discussion to follow if anyone is interested in pursuing this line of investigation.)

That's all my brain can manage for now. Apologies if these points have already been discussed; I haven't read all of the preceding discussion.