chillier

Senior Member (Voting Rights)

I want to try and test some different measures of cognitive exertion and want to document some thoughts here that I might update later (or more likely leave blank as available energy dictates). This will be a bit of a ramble.

Commercial options for Eye Tracking

There was some discussion previously about measuring eye movements with a wearable pair of glasses as a proxy for cognitive work, but having looked around, it's hard to find a commercial product that does this. Tobii makes gaze tracking products (tracking where your focus lies on a monitor) for gaming and research, but they are expensive, and the company isn't forthcoming about how much its glasses-based product even costs (probably £300+ based on its other products).

For counting saccades (eye movements), gaze detection isn't necessary, so their setup of 16 illuminators and 4 cameras is probably overkill.

Keyboard logging programs for Windows

As a near-zero-cost proxy for cognitive exertion, I'm playing with some keyboard activity logging programs to see whether button presses or mouse movement correlate with it. I've found a couple of ultra-lightweight ones for Windows that might be useful.

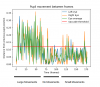

KeyCounter tallies minute-by-minute keyboard button presses (in red) and mouse movement distance (not shown), and provides the following plot (an example from my day a couple of days ago).

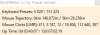

WinOMeter tallies keyboard presses, mouse trajectory, left, middle and right mouse clicks, and scroll wheel usage, and saves these values at a granularity of 1 day. That is probably more useful if you're planning to let it run in the background long term, which I am.

Data Collection to assess suitability

To get a sense of whether this metric really makes sense, I would like all of WinOMeter's metrics at a finer granularity of 10 minutes. I can't find a program that does specifically this, so I've written a Python script that launches whenever I turn on my computer and writes all these features to a timestamped spreadsheet every 10 minutes. Every 10 minutes I am also logging how I feel my cognitive exertion has been, from very low to very high (a highly subjective metric of course), along with a note of the general type of activity I have been doing (reading, watching, coding etc). Happy to share the code if anyone would like.
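For anyone wanting to try something similar, here is a rough sketch of what a logger like this could look like. It is not the actual script described above, just one possible shape for it. The class name `ActivityBucket`, the column names and the `attach_pynput_listeners` helper are all made up for illustration, and the input hooks assume the third-party `pynput` package is installed (only needed if you call that helper).

```python
import csv
import math
import os
import threading
from datetime import datetime

# Hypothetical column names, loosely mirroring the metrics WinOMeter reports.
FIELDS = ["timestamp", "key_presses", "mouse_distance_px",
          "left_clicks", "middle_clicks", "right_clicks", "scroll_steps"]


class ActivityBucket:
    """Accumulates input events and writes one CSV row per flush."""

    def __init__(self):
        self._lock = threading.Lock()   # listeners run in their own threads
        self._last_pos = None           # last seen mouse position
        self._reset()

    def _reset(self):
        self.counts = {name: 0 for name in FIELDS[1:]}
        self.counts["mouse_distance_px"] = 0.0

    def key_press(self):
        with self._lock:
            self.counts["key_presses"] += 1

    def mouse_move(self, x, y):
        with self._lock:
            if self._last_pos is not None:
                lx, ly = self._last_pos
                self.counts["mouse_distance_px"] += math.hypot(x - lx, y - ly)
            self._last_pos = (x, y)

    def click(self, button):
        # button is expected to be "left", "middle" or "right"
        with self._lock:
            self.counts[button + "_clicks"] += 1

    def scroll(self, steps=1):
        with self._lock:
            self.counts["scroll_steps"] += abs(steps)

    def flush(self, path, now=None):
        """Append the current totals as one timestamped row, then zero them."""
        now = now or datetime.now()
        with self._lock:
            row = {"timestamp": now.isoformat(timespec="seconds"), **self.counts}
            self._reset()
        new_file = not os.path.exists(path) or os.path.getsize(path) == 0
        with open(path, "a", newline="") as f:
            writer = csv.DictWriter(f, fieldnames=FIELDS)
            if new_file:
                writer.writeheader()
            writer.writerow(row)
        return row


def attach_pynput_listeners(bucket):
    """Assumption: uses the third-party pynput package to feed the bucket."""
    from pynput import keyboard, mouse

    def on_click(x, y, button, pressed):
        if pressed and button.name in ("left", "middle", "right"):
            bucket.click(button.name)

    keyboard.Listener(on_press=lambda key: bucket.key_press()).start()
    mouse.Listener(
        on_move=bucket.mouse_move,
        on_click=on_click,
        on_scroll=lambda x, y, dx, dy: bucket.scroll(dy),
    ).start()
```

To run it for real you would create a bucket, call `attach_pynput_listeners(bucket)`, and then loop with `time.sleep(600)` followed by `bucket.flush("activity_log.csv")` (the file name is just an example). Only counts are stored, never which keys were pressed.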

The aim of all this is that at some point in the future, if I have the energy, we can see whether patterns of usage correlate with exertion or activity type. You would imagine, for example, that a high exertion activity such as writing this comment would be highly correlated with keyboard presses, whereas something like browsing would involve more scrolling.
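As a sketch of how that comparison might be run later: since the exertion rating is an ordered scale (very low to very high) rather than a true number, a rank correlation such as Spearman's is one reasonable choice. This is only an assumption about the analysis, not something that has been done yet, and the function names below are made up. It uses only the standard library.

```python
import math


def average_ranks(values):
    """Rank values from 1..n, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # extend j over the run of values tied with position i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def pearson(xs, ys):
    """Plain Pearson correlation coefficient of two equal-length lists."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    if vx == 0 or vy == 0:
        raise ValueError("correlation is undefined when one series is constant")
    return cov / math.sqrt(vx * vy)


def spearman(xs, ys):
    """Spearman rank correlation: Pearson correlation of the ranks."""
    if len(xs) != len(ys):
        raise ValueError("series must have the same length")
    return pearson(average_ranks(xs), average_ranks(ys))
```

Here `xs` would be something like key presses per 10 minute block and `ys` the self-rated exertion for the same blocks, coded as 1 (very low) to 5 (very high). Running it separately per activity type would show whether, say, scrolling tracks exertion while browsing but not while coding.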

Drawbacks of this approach

I can think of lots of drawbacks. The first is that it's no use if you don't use your computer much or switch between devices a lot. Personally I use my computer almost all of the time I am awake, but this won't be true for many people. Some high exertion activities, like reading an academic paper, probably won't involve much keyboard activity at all. Other circumstances might have the opposite problem, such as certain video games that involve a lot of button mashing without all that much thinking.

I am of course roughly aware of how much I have used my keyboard in each 10 minutes, which could bias my perception of what counts as high or low mental exertion in my log.

More generally, what is and isn't cognitive exertion is extremely subjective and also depends on how familiar you are with the activity you're doing. Driving a car, for instance, is probably a lot more mental work for a beginner than for someone experienced, but metrics of activity would likely be the same.

--------------------

I'm also playing with some saccade measuring stuff using a webcam, or maybe infrared sensors strapped directly to glasses. It's very possible I won't update soon, depending on health, but we'll see.
