Perhaps others can check, but this seems like a contradiction to me. If you're rating the certainty of an important effect, that means not just any effect larger than 0, but an effect that is clinically significant and thus larger than the MID. So to rate the certainty of that, you need to check the confidence intervals in relation to the MID, not the null.

I think what you're saying here makes sense. I haven't looked into this thoroughly, but they cite "

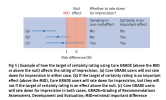

Core GRADE 2: choosing the target of certainty rating and assessing imprecision" (2025, BMJ), which states "The location of the point estimate of effect in relation to the chosen threshold determines the target. For instance, using the MID thresholds, a point estimate greater than the MID suggests an important effect and less than the MID, an unimportant or little to no effect. Users then rate down for imprecision if the 95% confidence interval crosses the MID for benefit or harm."

I.e., if the chosen threshold is the MID, and you're rating certainty in whether the effect is over that threshold, then you downgrade if the confidence interval crosses the MID.

That guidance does indeed say that the null can be used as a threshold, but that is only for determining if there is a treatment effect, not whether it is an important effect. It doesn't make sense, from what I see in the guidance, to use the MID as a threshold, and then use the null as the threshold for imprecision.

However, I think I may understand what they are trying to say. It seems to correspond to a somewhat unclear section in that guidance titled "Assessing whether a true underlying treatment effect exists (using null as threshold)". There, the null is still the chosen threshold, but the MID is used to some extent to determine whether you're rating certainty in an "unimportant" effect, i.e. one that is very close to the null and therefore probably of negligible importance.

But, based on my understanding of this, if you use the null as the threshold, and the point estimate isn't close enough to the null to say you're rating an "unimportant" effect, you are still then just rating your certainty in a "true underlying treatment effect", which is not the same as rating certainty in an important effect. To do that, you would need to use the MID as your threshold (and so rate down for imprecision if the CIs cross the MID).
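To make the distinction concrete, here is a rough sketch of how I read the two rules. It is only my interpretation, not anything taken from the guidance itself: the function names, the `target` labels, and the numbers are all made up for illustration, and it assumes the effect is a difference measure (null = 0) with the same MID for benefit and harm.

```python
def crosses(ci_low, ci_high, threshold):
    """True if the threshold lies strictly inside the confidence interval."""
    return ci_low < threshold < ci_high


def rate_down_for_imprecision(ci_low, ci_high, target, mid):
    """Hypothetical helper: should we rate down for imprecision?

    target = "any_effect"       -> threshold is the null (assumed to be 0)
    target = "important_effect" -> threshold is the MID (benefit or harm)
    """
    if target == "any_effect":
        return crosses(ci_low, ci_high, 0.0)
    if target == "important_effect":
        # Assumption: symmetric MID, +mid for benefit and -mid for harm.
        return crosses(ci_low, ci_high, mid) or crosses(ci_low, ci_high, -mid)
    raise ValueError(f"unknown target: {target}")


# Made-up example: 95% CI from 1.0 to 5.0, MID of 2.0.
ci_low, ci_high, mid = 1.0, 5.0, 2.0

# The CI excludes 0, so no rating down when the target is "any true effect".
print(rate_down_for_imprecision(ci_low, ci_high, "any_effect", mid))        # False

# The same CI crosses the MID, so rate down when the target is an important effect.
print(rate_down_for_imprecision(ci_low, ci_high, "important_effect", mid))  # True
```

So the same confidence interval can be precise enough to say there is some effect, while still being too imprecise to say the effect is important, which is why the threshold used for imprecision has to match the target you claim to be rating.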