Evaluating working memory functioning in individuals with myalgic encephalomyelitis/chronic fatigue syndrome: a systematic review and meta-analysis

Penson, Maddison; Kelly, Kate

[Line breaks added]

Abstract

Individuals with myalgic encephalomyelitis/chronic fatigue syndrome (ME/CFS) frequently report pronounced cognitive difficulties, yet the empirical literature has not fully characterised how discrete components of working memory are affected. Given that working memory serves as a foundational system supporting complex cognitive processes, differentiating performance across verbal and visual modalities provides critical insight into which higher-order functions may be most vulnerable.

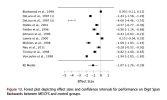

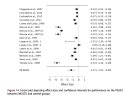

This systematic review/meta-analysis aimed to synthesise current research to investigate how ME/CFS impacts working memory systems. Using PRISMA guidelines, a systematic search of 6 databases was undertaken (MEDLINE, CINAHL, Web of Science Core Collection, PubMed, EMBASE and PsycINFO). Initially, 10 574 papers were imported and following screening 34 studies of good to strong quality met the inclusion criteria. A series of random effects models were utilised to analyse working memory.

Results indicated a significant difference and large effect size between ME/CFS individuals and controls on verbal working memory tasks; however, no significant difference in visual working memory performance was found between the groups. Following the breakdown of these subsystems into span/attentional control tasks and object/spatial tasks, these results remained consistent.

These findings contribute to the body of ME/CFS research by articulating where specific working memory deficits lie. Specifically, they show that individuals with ME/CFS have impaired verbal memory performance. This knowledge can guide future research targeting higher-order verbal cognition and underscores the importance of recognising cognitive manifestations within ME/CFS clinical care.

Web | DOI | Psychology, Health & Medicine | Paywall
