There is a huge problem in medicine with how the validity of self-rated improvement gets assessed: nobody comes close to understanding just how difficult it is to assess.

I had a really hard lesson over this in the last 2 years. I have improved considerably. 2 years ago, I was bedbound. Mainly because of out-of-control POTS, which has improved a lot since. But even with this, I was much better on several aspects of ME/CFS than I had been the year before. Mainly in odd neurological symptoms, sound intolerance, and so on. I was able to hold basic conversations for the first time in probably 4-5 years.

Since then I have slowly improved, but that only made it obvious just how hard it is to estimate those things. At some point I used to think I was maybe in a 20-30% range of overall capacity, but seeing how much I have improved since then, and how much more there is to improve, made it very clear that this 20-30% range was ridiculously over-inflated, and that it was more of a 5-6% range. There's too much to consider. It's like trying to guess the number of items in a jar when there are hundreds of thousands of them, and you can only view them from a single (misleading) angle. There's just no possible accuracy here.

It's basically unrealistic to guesstimate something as complex as this on a limited, and not even linear, single-digit scale. It just flat out doesn't work. There have to be metrics, standards and objective measures to compare to. Even the simple joke of using a banana for scale makes most size guesstimates more reliable than any standard health questionnaire I have seen applied to us.

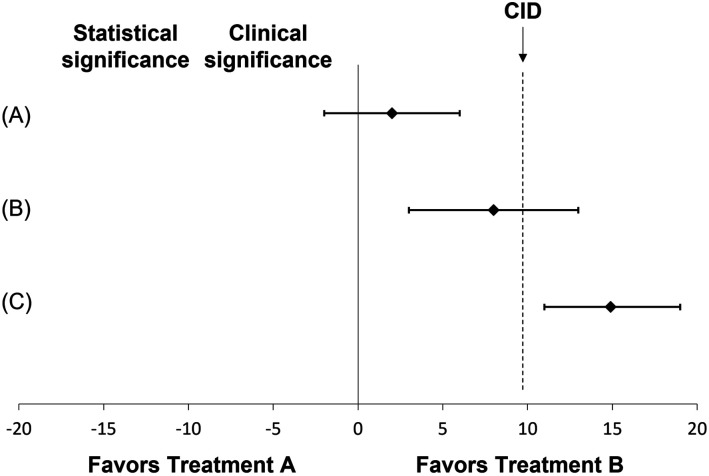

What health care professionals don't understand is that this isn't a problem for us, it's a problem, period. It's irreconcilable. There is no statistical analysis that can turn this mess of wildly inaccurate guesstimates into real practical numbers. They end up being so inaccurate in many cases it's actually worse than not trying to do that. Because they always do the thing where if the numbers look good, they are important and accurate, and if not, well they just have to figure out how to get the numbers just right. Whatever the numbers mean, which is usually both a number of unrelated things, and nothing at all. It just doesn't work like that at all.

But what it means more than anything is that none of those studies are reliable. Straight up not at all. They are not asking valid questions, making anything that comes after, analysis, interpretation, and so on, entirely arbitrary and useless for the most part.

What they are trying to do is the equivalent of doing chemistry without a reliable thermometer. It's just not possible. They could instead try to train a bunch of assistants at guessing the temperature of things by touch, and it would be exactly as useful as how questionnaires are misused in health care. They could show how most of the assistants usually have the same rough guesstimate ranges, and it still doesn't matter. They can show how multiple guesstimates made by the same person also tend to cluster near the same value, and it still doesn't matter: not accurate.
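To make the thermometer analogy concrete, here is a minimal sketch in Python. Everything in it is made up for illustration: the `touch_guess` function, the 30 °C anchor, the compression factor and the noise level are assumptions, not data from any study. It only shows that guesses can agree with each other, and with themselves on repeat, while all being far from the true value.

```python
import random
import statistics


def touch_guess(true_temp_c, rng):
    """Hypothetical guess by touch: every guesser shares the same bias
    (temperatures get compressed toward 30 C) plus a little personal noise."""
    shared_perception = 30.0 + 0.2 * (true_temp_c - 30.0)
    return shared_perception + rng.gauss(0.0, 1.0)


def simulate(true_temp_c=80.0, n_assistants=20, n_repeats=5, seed=0):
    """Have several assistants each guess the same temperature several times."""
    rng = random.Random(seed)
    guesses = [
        [touch_guess(true_temp_c, rng) for _ in range(n_repeats)]
        for _ in range(n_assistants)
    ]
    per_assistant_mean = [statistics.mean(g) for g in guesses]
    return {
        # How much the assistants disagree with each other.
        "between_assistant_sd": statistics.stdev(per_assistant_mean),
        # How much one assistant disagrees with their own earlier guesses.
        "within_assistant_sd": statistics.mean(
            [statistics.stdev(g) for g in guesses]
        ),
        # How far the average guess is from the real temperature.
        "mean_error": statistics.mean(per_assistant_mean) - true_temp_c,
    }


if __name__ == "__main__":
    for name, value in simulate().items():
        print(f"{name}: {value:.2f}")
```

With these made-up settings, both spreads come out at around a degree or less, while the average guess is about 40 °C too low by construction. The agreement says nothing about accuracy, because the error is shared by everyone.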

The root cause for much of this is something they will have to let go of eventually: they assume that the best they can do must be good enough, just because it's the best they can do. No, it's not. Especially not this. They even do the same thing with the treatments that they poorly assess, here and in general, thinking that since this rehabilitation stuff is the best they can do, then it must be good enough. I don't know what logical fallacy this is, but it's probably the mother of all logical fallacies: I think X therefore I am right about X because me thinking X is the best I can do.